Computable Graphs: Difference between revisions


{{Group
|Decription=How to ground knowledge graphs (that can be used for prediction or computational simulation experiments and models) in the discourse and quantitative evidence in scientific literature?
|Topics=Knowledge Graphs
|Projects=Synthesis center for cell biology, Translate Logseq Knowledge Graph to Systems Biology Network Diagrams
|Discord Channel Name=#computable-graphs
|Discord Channel URL=https://discord.com/channels/1029514961782849607/1038983137222467604
|Facilitator=Joel Chan
|Members=Aakanksha Naik, Akila Wijerathna-Yapa, Joel Chan, Matthew Akamatsu, Michael Gartner, Dafna Shahaf, Belinda Mo
}}

Facilitator/Point of Contact: [[Has Facilitator::Joel Chan]] | |||

== What == | |||

How to ground knowledge graphs (that can be used for prediction or computational simulation experiments and models) in the discourse of evidence in scientific literature? How to transition from unstructured literature to knowledge graphs and keep things updated with appropriate provenance for (un)certainty? | |||

== Discussion entry points == | |||

* [[Matthew Akamatsu]] https://discord.com/channels/1029514961782849607/1033091746139230238/1040212464631029822 | |||

== Resources == | |||

* [[Simularium]] - https://simularium.allencell.org/ | |||

* [[Vivarium Collective]] - https://vivarium-collective.github.io/ | |||

=== First breakout group session === | |||

who was present: Joel, Matt, Michael, Sid, Aakanksha, Belinda | |||

* anchoring on [[Synthesis center for cell biology]] | |||

* challenge: students wrapping their heads around the model; get really excited once they do | |||

* Dafna: very much like the old issue-based argument maps! | |||

* Belinda: what is scope of the project in terms of users? | |||

** Matt: hoping this is accessible all the way to high school students also!

* AICS is making platform for running simulations, make it easy to run and share with others - [[Simularium]] | |||

* Dafna: are there pain points on the input side? (seems painful!) | |||

* Dafna: how similar are the things that people pull out from the same paper? | |||

** Matt: for our field, pretty similar, esp. for well-written papers | |||

** Belinda: compare what each person is highlighting and extract summary that is representative of all the annotations | |||

* Dafna: how useful are these (bits of) knowledge graphs for others? | |||

** Works well within lab; better than unstructured text, motivating to try to create micropublications to summarize the outcome of a 10-week rotation

* Dafna: "compiling" discourse graph to manuscript seems much easier, esp. if have consistent structure and human-in-the-loop | |||

** Similar to brainstorming discussion/ethics statements for a paper given abstract (via GPT-3)

* Belinda: how much variation in paper structure within your field? | |||

** some variations by journal | |||

** some authors (think more highly of themselves), more declarative/general, less clear distinction between claims and evidence | |||

* Aakanksha: | |||

** hypothes.is experiment | |||

** need infra changes | |||

*** help make the case for these changes | |||

**** maybe how much $$ each person would pay for this!!

**** demonstration of value | |||

**** brainstorming what research projects would be part of this | |||


emerging themes/problems: | |||

* idea around changing the reading process somehow (with high hopes for something like semantic scholar PD reader that has beginning annotations tuned to what matt is trying to extract, maybe also on the abstract level) --> these could also feed back / forward to other users

** can probably start from this: Scim: Intelligent Faceted Highlights for Interactive, Multi-Pass Skimming of Scientific Papers <nowiki>https://arxiv.org/pdf/2205.04561.pdf</nowiki> | |||

** cross connections to what | |||

* idea of compiling from discourse graph to manuscript seems feasible? | |||

** Belinda could mock something up in OpenAI playground really quickly for a few papers | |||

*** need examples or access to repo | |||

** Could also integrate into Matt's lab via GPT-3 extension | |||

* question: understanding different levels of value of having a knowledge graph for someone else who didn't create it | |||

** --> Joel can add links to ongoing lit review on this question - not resolved yet | |||

* theme/idea: "compiling" from discourse to knowledge graphs | |||

** Aakanksha: often see ontologies in isolation | |||

*** Aakanksha: can look into NLP around adding context to knowledge graphs | |||

** Michael: interesting to think about the user experience on this - how do they interact? | |||

** Grounding abstractions: https://www.susielu.com/data-viz/abstractions | |||

== Contextualizing knowledge graphs == | |||

=== Key Question: Research connections between knowledge base provenance and NLP === | |||

==== Background ==== | |||

Knowledge bases or graphs are typically stored in the form of subject-predicate-object triples, often using formats like RDF (Resource Description Framework) and OWL (Web Ontology Language). By default, these triple-based formats don’t include provenance data (the source from which a triple was extracted, time of extraction, modification history, evidence for the triple, etc.) or any other additional context. But contextual information can improve overall utility of a KG/KB, and is particularly helpful to capture when scholars are synthesizing literature into such structures (either individually or collectively).

==== What can additional context help with? ==== | |||

* Authority or trustworthiness of information | |||

* Transparency of the process leading to the conclusion | |||

* Validation and review of experiments conducted to attain a result | |||

Property graphs/attribute graphs in which entities and relations can have attributes | |||

==== Ways to insert additional context into a KG ==== | |||

Broadly can be distilled into the following strategies: Increasing number of nodes in a triple, allowing “nodes” to be triples themselves, adding attributes or descriptions to nodes, adding attributes to triples (attributes may or may not be treated the same as predicates) | |||

RDF reification: Add additional RDF triples that link a subject, predicate or object entity with its source (or any other provenance attribute of interest). Leads to bloating - 4x the number of triples if you want to store information about subject, predicate, object and overall triple. | |||

N-ary relations: Instead of binary relations, allow more entities (aside from subject and object) to be included in the same relation. This can complicate traversal of the knowledge graph. | |||

Adding metadata property-value pairs to RDF triples | |||

Forming RDF molecules, where subjects/objects/predicates are RDF triples (allowing recursion) | |||

Named graphs: two named graphs (a default graph and an assertion graph) store the data, and another graph records the provenance data

Nanopublications, which seem very similar in structure to the discourse graph format | |||

Most of these representations are text-only, what about multimodal provenance (images, toy datasets, audio and video recordings)? | |||

==== At what levels can we have additional context? ==== | |||

[https://link.springer.com/content/pdf/10.1007/s41019-020-00118-0.pdf <nowiki>[1]</nowiki>] provides a review (slight focus on cybersecurity domain) and suggests the following granularities at which provenance/context information can be recorded:

Element-level provenance (how were certain entity or predicate types defined) | |||

Triple-level provenance (most common level of provenance) | |||

Molecule-level and graph-level provenance (having provenance information for composites or collections of RDF triples, but not individual triples) | |||

Document-level provenance and corpus-level provenance (only having provenance information for documents or corpora from which triples are identified) | |||

Scalability is a concern when deciding the level and extent to which provenance data can be retained | |||

==== What kinds of information does provenance cover? ==== | |||

Domain-agnostic information types: | |||

* Creation of the data | |||

* Publication of the data | |||

* Distribution of the data | |||

* Copyright information | |||

* Modification and revision history | |||

* Current validity | |||

Domain-specific provenance ontologies: defined for BBC, US Government, scientific experiments | |||

DeFacto tool for deep fact validation on the web: https://github.com/DeFacto/DeFacto

==== [[question]] What additional context beyond provenance could be useful for scholarly knowledge graphs specifically? ==== | |||

* Concept drift over time (in general entity/relation evolution over time) | |||

* Seems like discourse links across papers don’t neatly fit the provenance definition, but are an aspect we want to capture? | |||

* Are there other aspects that provenance doesn’t cover? | |||

* Some key pointers in Bowker (2000): https://joelchan.me/INST801-FA22%20Readings/Bowker_2000_Biodiversity%20Datadiversity.pdf | |||

==== Links to NLP research ==== | |||

Seems like there's a bit of a gap between NLP research | |||

[[question]] What are the points of overlap between subproblems around scholarly knowledge graph provenance and existing NLP research? | |||

(probably a rich opportunity for a position paper - could be interesting to NLP venues; e.g., [[NeuRIPS AI for science workshop]]: possible contact: Kexin Huang)

* Biggest open questions seem to be: | |||

** scalability vs expressivity, multiple modalities, updation | |||

** Interpretability or transparency: Provenance information can be used to make KGs more transparent, because we have sources that provide evidence for why this fact/relation holds true. | |||

** Commonsense angle: Another broader application where storing context might be helpful could be for commonsense knowledge graphs? | |||

*** Might have an analog in terms of "scholarly" (un)common sense, esp. across disciplinary boundaries (but this also has analogs in cross-cultural barriers to communication) | |||

* Maps to things with some existing body of work | |||

** Scoring functions in KRL: In representation learning for KGs, models are trained to embed entities and relations in a common space and then score (subject, predicate, object) triples according to likelihood - can these scores be used as additional “plausibility” or “validity” metadata from a provenance perspective? However, these scores are usually produced by models that are learning to replicate the structure of the knowledge graph, so they can’t link back to any textual evidence to support the judgements.

** Auxiliary knowledge in graphs: [https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9416312 <nowiki>[2]</nowiki>] lists some work that stores textual descriptions alongside entities, entity images (image-embodied IKRL) and uncertainty score information (ProBase, NELL and ConceptNet). | |||

** Temporal knowledge graphs: [2] is a survey of knowledge graphs from an NLP perspective and highlights temporal knowledge graphs as a key area of research. Temporality is one kind of provenance information. Current directions being explored include | |||

*** Temporal embeddings: extending existing KRL methods for quadruples instead of triples, where time is the fourth entity | |||

*** Entity dynamics: changes in entity states over time | |||

*** Temporal relational dependence (e.g., a person must be born before dying) | |||

*** Temporal logic reasoning: graphs in which temporal information is uncertain. | |||

** Knowledge acquisition: Some work has tried to explore jointly extracting entities/relations from unstructured text and performing knowledge graph completion. This can be a useful way to jointly model unstructured text from previous papers that do not have associated graphs and papers for which discourse graphs have been annotated. In general, joint graph+text embedding techniques seem useful. Additionally, a technique often used to train models to extract relations from text, distant supervision, can probably be leveraged to detect and add provenance information post-hoc. The idea behind distant supervision is to scan text and find sentences that mention both entities involved in the relation, considering such sentences to be potential evidence sentences. But this is a noisy heuristic - a sentence mentioning two connected entities may not be mentioning the relation between them - and will likely require some curation. | |||

[[/link.springer.com/chapter/10.1007/978-3-642-13818-8 32|Application to finding evidence for clinical KBs]] | |||

==== Important contexts to be captured for synthesis of scientific literature ==== | |||

[https://dl.acm.org/doi/pdf/10.1145/3227609.3227689 <nowiki>[3]</nowiki>] proposes a framework to construct a knowledge graph for science. Problems, methods and results are key semantic resources, in addition to people, publications, institutions, grants, and datasets. Four modes of input:

# Bridge existing KGs, ontologies, metadata and information models | |||

# Enable scientists to add their research possibly with some intelligent interface support | |||

# Use automated methods for information extraction and linking | |||

# Support curation and quality assurance by domain experts and librarians | |||

The underlying data model that supports this endeavor can adopt RDF or Linked Data as a scaffold but must add comprehensive provenance, evolution and discourse information. Other requirements include ability to store and query graphs at scale, UI widgets that support collaborative authoring and curation and integration with semi-automated ways of extraction, search and recommendation. | |||

Top-level ontology: research contribution, communicates one or more results in an attempt to address a problem using a set of methods. Top-down design of more specific elements is needed from domain experts across a set of domains. | |||

Infrastructure: KG can be queried and explored by anyone, but registration is required to contribute to the KG (a way to ensure proper authorization and domain expertise?) | |||

KGs need to capture fuzziness: competing evidence, differing definitions and conceptualizations | |||

Interesting question around score for contributions to the scientific KG instead of document-centric metrics like h-indices (potentially more accurate assessment of contributions) | |||

[https://www.researchgate.net/profile/Soeren-Auer/publication/330751750_Open_Research_Knowledge_Graph_Towards_Machine_Actionability_in_Scholarly_Communication/links/5c5e9bc7a6fdccb608b28f6f/Open-Research-Knowledge-Graph-Towards-Machine-Actionability-in-Scholarly-Communication.pdf <nowiki>[4]</nowiki>] has a really nice analogy: when phone books and maps were digitally transformed, we didn’t just create PDF books with this information; instead we developed new means to organize and access information. Why not push for such a re-organization in scholarly communication too? Building on [3], their ontology also contains: problem statement, approach, evaluation and conclusions. They use RDF with a minor difference: everything can be modeled as an entity with a unique ID. Limited subset of OWL (subclass inference) is supported. Small user study with this tool, but it doesn’t seem like Dafna’s question about utility of a KG constructed by someone else, or consensus across people, was measured. But they do speak to Dafna's question about how painful it is to construct KGs.

so possible good open [[question]]: how might KGs constructed by one set of scientists actually be useful or not for other scientists, and what factors influence whether this is the case? | |||

* [[experiment]] idea: even just starting by compiling any successful instances of adoption of KGs that span multiple groups would be a good thing to do; [[Peter Murray-Rust]]. maybe [https://www.nlm.nih.gov/research/umls/index.html UMLS] also

Note: Both papers actually reference a fair number of other works in this direction, which could be good follow-up reading | |||

=== Papers I didn’t get to === | |||

(numbering here does *not* map to numbering in sections above) | |||

[1] [https://pdfs.semanticscholar.org/713b/b398b85b034f2139e08b4ca0f7791fd545bc.pdf?_ga=2.19474043.2123037593.1668226180-648530244.1660769576 Empirical study of RDF reification] | |||

[2] [[/dl.acm.org/doi/pdf/10.1145/3459637.3482330|Maintaining provenance in uncertain KGs]], [[/arxiv.org/pdf/2007.14864.pdf|maintaining provenance in dynamic KGs]] | |||

[3] [[/kaixu.files.wordpress.com/2011/09/provtrack-ickde-submission.pdf|System for provenance exploration]] | |||

[4] [[/www.semanticscholar.org/paper/iKNOW:-A-platform-for-knowledge-graph-construction-Babalou-Costa/3a492a7b2d04221941919f80ca259506866490b3|iKNOW: KG construction for biodiversity]] | |||

[5] [[/eprints.soton.ac.uk/412923/1/WD sources iswc 7 .pdf|Provenance information in a collaborative environment: evaluation of Wikipedia external references]] | |||

[6] [[/arxiv.org/pdf/2210.14846.pdf|ProVe: Automated provenance verification of KGs against text]] | |||

== Understanding knowledge graph transfer == | |||

I was able to track down my main notes/sources from before and clean them up a little (not *that* much effort :)): | |||

Last version (not most updated, bc export is slooowwwwww from Roam rn) here: https://publish.obsidian.md/joelchan-notes/discourse-graph/questions/QUE+-+How+can+we+best+bridge+private+vs.+public+knowledge | |||

Will clean up based on this: https://roamresearch.com/#/app/megacoglab/page/XHvRRSi_0 | |||

TL;DR (can clean up here based on [[Discourse Modeling]] conventions :): | |||

* distributed sensemaking (Kittur, Goyal, etc.; some promise of yes helpful to share) | |||

* sensemaking handoff (limited, but a major focus in intelligence analysis, there are tools and processes for doing this) | |||

* reuse of records/data/stuff in general in CSCW across a bunch of work domains/settings, like aircraft safety, healthcare, etc. (generally talks about why it's hard to reuse stuff from others, need context) | |||

* annotations / social reading (generally negative/mixed) | |||

MG: can we search the literature based on what tool they studied things with? | |||

NO. not right now. | |||

lack of good tools to "refactor" the literature to match those queries | |||

maybe like Elicit? https://elicit.org/search?q=What+is+the+impact+of+creatine+on+cognition%3F&token=01GHRSBKG6P1R9343NBP2ZWZ5B&c-ps=intervention&c-ps=outcome-measured&c-s=What+tool+did+they+study but for everything?? | |||

== Compiling graphs to manuscripts == | |||

=== Overview === | |||

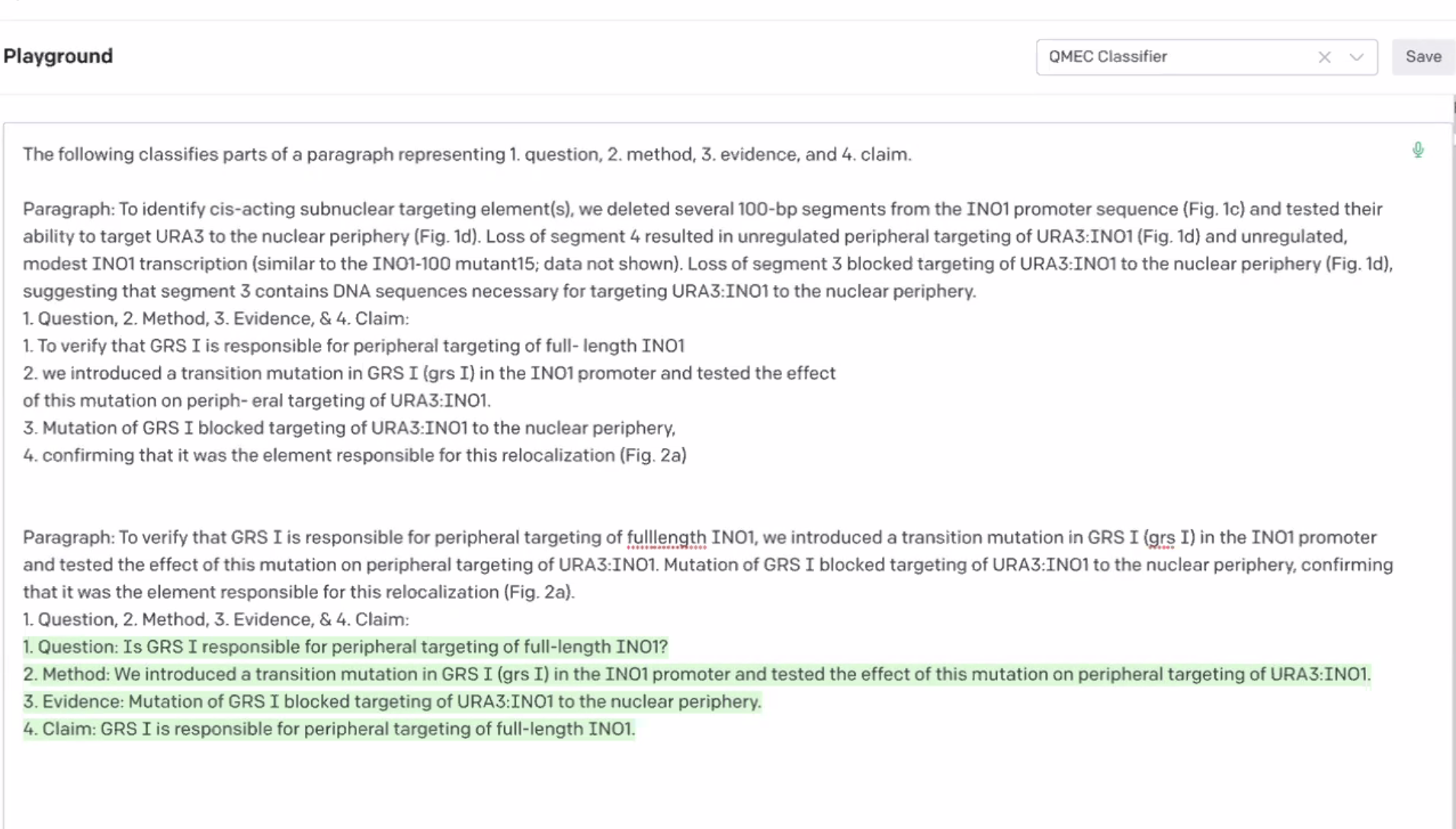

We'd like to build a prototype of a tool that starts with discourse units (phrases with the category Question/motivation, Method, Evidence, Claim) and uses NLP to generate a draft of a manuscript paragraph. | |||

[[File:Discourse graph to manuscript draft via NLP.png|900x900px]] | |||
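A rough sketch of the first step of such a prototype, assuming the discourse units are already available as (category, text) pairs: order them as Question, Method, Evidence, Claim and assemble a plain-text prompt that could be pasted into something like the OpenAI playground. The `DiscourseUnit` class, `build_prompt` function, prompt wording, and example texts are all hypothetical and only for illustration; no model is called here.

```python
from dataclasses import dataclass
from typing import List

# Category order follows the discourse unit types named above.
CATEGORIES = ("Question", "Method", "Evidence", "Claim")


@dataclass
class DiscourseUnit:
    category: str  # one of CATEGORIES
    text: str      # the phrase pulled from the discourse graph


def build_prompt(units: List[DiscourseUnit]) -> str:
    """Assemble a text prompt asking for one manuscript paragraph."""
    lines = ["Write one manuscript paragraph from these discourse units:"]
    # Group units by category so the prompt always reads Q -> M -> E -> C.
    for category in CATEGORIES:
        for unit in units:
            if unit.category == category:
                lines.append(f"{category}: {unit.text}")
    lines.append("Paragraph:")
    return "\n".join(lines)


# Hypothetical example units, deliberately given out of order.
example_units = [
    DiscourseUnit("Claim", "Protein A is required for process B."),
    DiscourseUnit("Question", "Is protein A required for process B?"),
    DiscourseUnit("Evidence", "Process B stalled when protein A was removed."),
    DiscourseUnit("Method", "Knockdown of protein A followed by imaging."),
]
example_prompt = build_prompt(example_units)
```

Keeping the prompt assembly separate from any model call means the same units could be sent to the playground by hand or to an extension later, with a human in the loop editing the draft.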

=== Purpose === | |||

Such a tool would speed up a really time consuming aspect of the academic job: drafting grant proposals and manuscripts from content. It would also encourage researchers to generate structured content (questions/claims/evidence) which will get incorporated into a discourse graph. | |||

The benefit to the user is a structured approach to writing papers and grant proposals. Using the tool will introduce researchers to the concept of discourse units, and generate a repository of discourse units that can be turned into discourse graphs. | |||

=== Ideal outcomes === | |||

(more to add here) | |||

This tool could also enable the micropublication of mini discourse graphs (one question/method/evidence/claim) by generating a draft of the explanatory text. | |||

* Could also integrate into Roam Research graphs, e.g. in Matt's lab, via the Roam GPT-3 extension | |||

=== What we're doing next === | |||

* Belinda could mock something up in OpenAI playground really quickly for a few papers | |||

** need examples or access to repo | |||

* Matt and Michael will share some examples of papers or content from the discourse graph to use as source data | |||

* Sid can check out [https://github.com/LayBacc/roam-ai Roam AI extension] for incorporation into our roam graph workflow (fork, make modifications etc) | |||

** ([https://www.reddit.com/r/RoamResearch/comments/yigf6q/im_genuinely_fascinated_with_the_roam_ai/ discussion on its usage]) | |||

** [https://www.loom.com/share/d152e7a184f94080b8777f595821f43e usage video] | |||

=== Related conversation === | |||

* Dafna: "compiling" discourse graph to manuscript seems much easier, esp. if have consistent structure and human-in-the-loop | |||

** Similar to brainstorming discussion/ethics statements for a paper given abstract (via GPT-3)

* Belinda: how much variation in paper structure within your field? | |||

** some variations by journal | |||

=== Claims in the conversation that need evidence === | |||

* the majority of empirical research papers in biology have a similar structure (question/ motivation/ evidence (fig.1a)/ claim for each paragraph & figure panel) | |||

* multiple researchers (or students) asked to highlight the questions/claims/evidence text from a paper will highlight similar/consensus text (part of the NLP-to-highlights project) | |||

notes/questions | |||

* may need more than just the sentences, but also mapping to intra-project/paper elements, like figures and tables to | |||

[[File:Screenshot example of GPT-3 task for extracting components from narrative.png|none|thumb]] | |||

== Next Steps == | |||

* don't know if a joint project makes sense, but perhaps coordinated first prototypes of a bridge? | |||

could use: | |||

* someone with programming skills to implement a POC translation between a discourse graph and one of the specific modeling languages/ontologies | |||

Latest revision as of 14:18, 18 November 2022

| Computable Graphs | |

|---|---|

| Description | How to ground knowledge graphs (that can be used for prediction or computational simulation experiments and models) in the discourse and quantitative evidence in scientific literature? |

| Related Topics | Knowledge Graphs |

| Projects | Synthesis center for cell biology, Translate Logseq Knowledge Graph to Systems Biology Network Diagrams |

| Discord Channel | #computable-graphs |

| Facilitator | Joel Chan |

| Members | Aakanksha Naik, Dafna Shahaf, Belinda Mo, Akila Wijerathna-Yapa, Matthew Akamatsu, Michael Gartner, Joel Chan |

Facilitator/Point of Contact: Joel Chan

What

How to ground knowledge graphs (that can be used for prediction or computational simulation experiments and models) in the discourse of evidence in scientific literature? How to transition from unstructured literature to knowledge graphs and keep things updated with appropriate provenance for (un)certainty?

Discussion entry points

- Matthew Akamatsu https://discord.com/channels/1029514961782849607/1033091746139230238/1040212464631029822

Resources

- Simularium - https://simularium.allencell.org/

- Vivarium Collective - https://vivarium-collective.github.io/

First breakout group session

who was present: Joel, Matt, Michael, Sid, Aakanksha, Belinda

- anchoring on Synthesis center for cell biology

- challenge: students wrapping their heads around the model; get really excited once they do

- Dafna: very much like the old issue-based argument maps!

- Belinda: what is scope of the project in terms of users?

- Matt: hoping this is accessible all the way to high school students also!

- AICS is making platform for running simulations, make it easy to run and share with others - Simularium

- Dafna: are there pain points on the input side? (seems painful!)

- Dafna: how similar are the things that people pull out from the same paper?

- Matt: for our field, pretty similar, esp. for well-written papers

- Belinda: compare what each person is highlighting and extract summary that is representative of all the annotations

- Dafna: how useful are these (bits of) knowledge graphs for others?

- Works well within lab; better than unstructured text, motivating to try to create micropublications to summarize the outcome of a 10-week rotation

- Dafna: "compiling" discourse graph to manuscript seems much easier, esp. if have consistent structure and human-in-the-loop

- Similar to brainstorming discussion/ethics statements for a paper given abstract (via GPT-3)

- Belinda: how much variation in paper structure within your field?

- some variations by journal

- some authors (think more highly of themselves), more declarative/general, less clear distinction between claims and evidence

- Aakanksha:

- hypothes.is experiment

- need infra changes

- help make the case for these changes

- maybe how much $$ each person would pay for this!!

- demonstration of value

- brainstorming what research projects would be part of this

emerging themes/problems:

- idea around changing the reading process somehow (with high hopes for something like semantic scholar PD reader that has beginning annotations tuned to what matt is trying to extract, maybe also on the abstract level) --> these could also feed back / forward to other users

- can probably start from this: Scim: Intelligent Faceted Highlights for Interactive, Multi-Pass Skimming of Scientific Papers https://arxiv.org/pdf/2205.04561.pdf

- cross connections to what

- idea of compiling from discourse graph to manuscript seems feasible?

- Belinda could mock something up in OpenAI playground really quickly for a few papers

- need examples or access to repo

- Could also integrate into Matt's lab via GPT-3 extension

- question: understanding different levels of value of having a knowledge graph for someone else who didn't create it

- --> Joel can add links to ongoing lit review on this question - not resolved yet

- theme/idea: "compiling" from discourse to knowledge graphs

- Aakanksha: often see ontologies in isolation

- Aakanksha: can look into NLP around adding context to knowledge graphs

- Michael: interesting to think about the user experience on this - how do they interact?

- Grounding abstractions: https://www.susielu.com/data-viz/abstractions

Contextualizing knowledge graphs

Key Question: Research connections between knowledge base provenance and NLP

Background

Knowledge bases or graphs are typically stored in the form of subject-predicate-object triples, often using formats like RDF (Resource Description Framework) and OWL (Web Ontology Language). By default, these triple-based formats don’t include provenance data (the source from which a triple was extracted, time of extraction, modification history, evidence for the triple, etc.) or any other additional context. But contextual information can improve overall utility of a KG/KB, and is particularly helpful to capture when scholars are synthesizing literature into such structures (either individually or collectively).

What can additional context help with?

- Authority or trustworthiness of information

- Transparency of the process leading to the conclusion

- Validation and review of experiments conducted to attain a result

Property graphs/attribute graphs in which entities and relations can have attributes

Ways to insert additional context into a KG

Broadly can be distilled into the following strategies: Increasing number of nodes in a triple, allowing “nodes” to be triples themselves, adding attributes or descriptions to nodes, adding attributes to triples (attributes may or may not be treated the same as predicates)

RDF reification: Add additional RDF triples that link a subject, predicate or object entity with its source (or any other provenance attribute of interest). Leads to bloating - 4x the number of triples if you want to store information about subject, predicate, object and overall triple.

N-ary relations: Instead of binary relations, allow more entities (aside from subject and object) to be included in the same relation. This can complicate traversal of the knowledge graph.

Adding metadata property-value pairs to RDF triples

Forming RDF molecules, where subjects/objects/predicates are RDF triples (allowing recursion)

Named graphs: two named graphs (a default graph and an assertion graph) store the data, and another graph records the provenance data

Nanopublications, which seem very similar in structure to the discourse graph format

Most of these representations are text-only, what about multimodal provenance (images, toy datasets, audio and video recordings)?
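To make two of the strategies above concrete, here is a minimal sketch in plain Python (no RDF library) of standard RDF reification and of a named-graph style quad. The `rdf:` terms are the standard reification vocabulary and `prov:wasDerivedFrom` is from the W3C PROV ontology; the `ex:` identifiers, the `ex:provenance` graph name, and the helper names `reify` and `as_named_graph` are made up for illustration.

```python
from typing import List, NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


class Quad(NamedTuple):
    subject: str
    predicate: str
    obj: str
    graph: str  # name of the graph the triple belongs to


def reify(triple: Triple, statement_id: str, source: str) -> List[Triple]:
    """Describe one triple with four reification triples, then attach a source.

    The four rdf:* triples are where the bloat comes from: each annotated
    fact needs a statement node plus links to its subject, predicate, object.
    """
    return [
        Triple(statement_id, "rdf:type", "rdf:Statement"),
        Triple(statement_id, "rdf:subject", triple.subject),
        Triple(statement_id, "rdf:predicate", triple.predicate),
        Triple(statement_id, "rdf:object", triple.obj),
        Triple(statement_id, "prov:wasDerivedFrom", source),
    ]


def as_named_graph(triple: Triple, graph_id: str, source: str) -> List[Quad]:
    """Put the triple in a named graph and describe that graph elsewhere."""
    return [
        Quad(triple.subject, triple.predicate, triple.obj, graph_id),
        # Provenance is stated once about the graph, in a separate graph.
        Quad(graph_id, "prov:wasDerivedFrom", source, "ex:provenance"),
    ]


# Hypothetical fact and source, for illustration only.
fact = Triple("ex:ProteinA", "ex:binds", "ex:ProteinB")
reified = reify(fact, "ex:statement1", "ex:paper1")
quads = as_named_graph(fact, "ex:graph1", "ex:paper1")
```

In this sketch the reified form needs five triples for one annotated fact, while the named-graph form needs two quads, and the second quad can be shared by every triple placed in the same graph.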

At what levels can we have additional context?

[1] provides a review (slight focus on cybersecurity domain) and suggests the following granularities at which provenance/context information can be recorded:

Element-level provenance (how were certain entity or predicate types defined)

Triple-level provenance (most common level of provenance)

Molecule-level and graph-level provenance (having provenance information for composites or collections of RDF triples, but not individual triples)

Document-level provenance and corpus-level provenance (only having provenance information for documents or corpora from which triples are identified)

Scalability is a concern when deciding the level and extent to which provenance data can be retained

What kinds of information does provenance cover?

Domain-agnostic information types:

- Creation of the data

- Publication of the data

- Distribution of the data

- Copyright information

- Modification and revision history

- Current validity

Domain-specific provenance ontologies: defined for BBC, US Government, scientific experiments

DeFacto tool for deep fact validation on the web: https://github.com/DeFacto/DeFacto
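One way to picture the domain-agnostic information types listed above is as a small record attached to a triple (or to a document, depending on the granularity chosen). The sketch below is only an assumption about how such a record might look; the `ProvenanceRecord` class and its field names are hypothetical and not taken from any existing provenance ontology.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProvenanceRecord:
    created_by: Optional[str] = None       # creation of the data
    published_in: Optional[str] = None     # publication of the data
    distributed_via: Optional[str] = None  # distribution of the data
    license: Optional[str] = None          # copyright information
    revisions: List[str] = field(default_factory=list)  # revision history
    currently_valid: bool = True           # current validity

    def revise(self, note: str) -> None:
        """Append a note to the modification history."""
        self.revisions.append(note)

    def missing_fields(self) -> List[str]:
        """List the descriptive fields that have not been filled in yet."""
        names = ("created_by", "published_in", "distributed_via", "license")
        return [name for name in names if getattr(self, name) is None]


# Hypothetical record for one extracted triple.
record = ProvenanceRecord(created_by="annotator-1", published_in="paper-1")
record.revise("relation label changed after lab discussion")
```

A `missing_fields` style check is one cheap way to see how much provenance is actually being retained, which matters given the scalability concern noted earlier.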

question What additional context beyond provenance could be useful for scholarly knowledge graphs specifically?

- Concept drift over time (in general entity/relation evolution over time)

- Seems like discourse links across papers don’t neatly fit the provenance definition, but are an aspect we want to capture?

- Are there other aspects that provenance doesn’t cover?

- Some key pointers in Bowker (2000): https://joelchan.me/INST801-FA22%20Readings/Bowker_2000_Biodiversity%20Datadiversity.pdf

Links to NLP research

Seems like there's a bit of a gap between NLP research

question What are the points of overlap between subproblems around scholarly knowledge graph provenance and existing NLP research?

(probably a rich opportunity for a position paper - could be interesting to NLP venues; e.g., NeuRIPS AI for science workshop: possible contact: Kexin Huang)

- Biggest open questions seem to be:

- scalability vs expressivity, multiple modalities, updation

- Interpretability or transparency: Provenance information can be used to make KGs more transparent, because we have sources that provide evidence for why this fact/relation holds true.

- Commonsense angle: Another broader application where storing context might be helpful could be for commonsense knowledge graphs?

- Might have an analog in terms of "scholarly" (un)common sense, esp. across disciplinary boundaries (but this also has analogs in cross-cultural barriers to communication)

- Maps to things with some existing body of work

- Scoring functions in KRL: In representation learning for KGs, models are trained to embed entities and relations in a common space and then score (subject, predicate, object) triples according to likelihood - can these scores be used as additional “plausibility” or “validity” metadata from a provenance perspective? However, these scores are usually produced by models that are learning to replicate the structure of the knowledge graph, so they can’t link back to any textual evidence to support the judgements.

- Auxiliary knowledge in graphs: [2] lists some work that stores textual descriptions alongside entities, entity images (image-embodied IKRL) and uncertainty score information (ProBase, NELL and ConceptNet).

- Temporal knowledge graphs: [2] is a survey of knowledge graphs from an NLP perspective and highlights temporal knowledge graphs as a key area of research. Temporality is one kind of provenance information. Current directions being explored include:

- Temporal embeddings: extending existing KRL methods for quadruples instead of triples, where time is the fourth entity

- Entity dynamics: changes in entity states over time

- Temporal relational dependence (e.g., a person must be born before dying)

- Temporal logic reasoning: graphs in which temporal information is uncertain.

- Knowledge acquisition: Some work has tried to explore jointly extracting entities/relations from unstructured text and performing knowledge graph completion. This can be a useful way to jointly model unstructured text from previous papers that do not have associated graphs and papers for which discourse graphs have been annotated. In general, joint graph+text embedding techniques seem useful. Additionally, a technique often used to train models to extract relations from text, distant supervision, can probably be leveraged to detect and add provenance information post-hoc. The idea behind distant supervision is to scan text and find sentences that mention both entities involved in the relation, considering such sentences to be potential evidence sentences. But this is a noisy heuristic - a sentence mentioning two connected entities may not be mentioning the relation between them - and will likely require some curation.
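
A rough sketch of the distant supervision heuristic described in the last bullet: scan text and keep sentences that mention both entities of a triple as candidate evidence. The function names and the toy text are assumptions for illustration, and the naive regex sentence splitter and substring matching stand in for a proper NLP pipeline.

```python
import re
from typing import List, Tuple


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def candidate_evidence(triple: Tuple[str, str, str], text: str) -> List[str]:
    """Return sentences mentioning both the subject and the object of the triple.

    The predicate is deliberately ignored: that is what makes the heuristic
    noisy, so the output is a list of candidates for curation, not verified
    evidence.
    """
    subject, _predicate, obj = triple
    s, o = subject.lower(), obj.lower()
    return [
        sent for sent in split_sentences(text)
        if s in sent.lower() and o in sent.lower()
    ]


# Toy text, made up for illustration.
toy_text = (
    "Aspirin is commonly used to treat headache. "
    "Aspirin and headache were both listed in the survey form. "
    "Ibuprofen was not studied."
)
candidates = candidate_evidence(("aspirin", "treats", "headache"), toy_text)
for sentence in candidates:
    print(sentence)
```

In the toy text, the second sentence mentions both entities without stating the relation between them, which is the kind of false positive that would need curation.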

Application to finding evidence for clinical KBs

Important contexts to be captured for synthesis of scientific literature

[3] proposes a framework to construct a knowledge graph for science. Problems, methods and results are key semantic resources, in addition to people, publications, institutions, grants, and datasets. Four modes of input:

- Bridge existing KGs, ontologies, metadata and information models

- Enable scientists to add their research possibly with some intelligent interface support

- Use automated methods for information extraction and linking

- Support curation and quality assurance by domain experts and librarians

The underlying data model that supports this endeavor can adopt RDF or Linked Data as a scaffold but must add comprehensive provenance, evolution and discourse information. Other requirements include ability to store and query graphs at scale, UI widgets that support collaborative authoring and curation and integration with semi-automated ways of extraction, search and recommendation.

Top-level ontology: research contribution, communicates one or more results in an attempt to address a problem using a set of methods. Top-down design of more specific elements is needed from domain experts across a set of domains.
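
A minimal sketch of that top-level ontology as plain triples, with a source pointer on every statement as a stand-in for provenance. The predicate names (`addresses`, `uses_method`, `communicates_result`) and identifiers are hypothetical and are not the vocabulary used in [3]; a real implementation would more likely sit on an RDF store.

```python
from typing import List, NamedTuple


class Statement(NamedTuple):
    subject: str
    predicate: str
    obj: str
    source: str  # provenance: where this statement came from


def describe_contribution(
    contribution_id: str,
    problem: str,
    methods: List[str],
    results: List[str],
    source: str,
) -> List[Statement]:
    """Expand one research contribution into problem/method/result statements."""
    statements = [Statement(contribution_id, "addresses", problem, source)]
    statements += [Statement(contribution_id, "uses_method", m, source) for m in methods]
    statements += [Statement(contribution_id, "communicates_result", r, source) for r in results]
    return statements


def objects_of(statements: List[Statement], subject: str, predicate: str) -> List[str]:
    """Tiny query helper: all objects linked to a subject by a predicate."""
    return [s.obj for s in statements if s.subject == subject and s.predicate == predicate]


# Placeholder identifiers, made up for illustration.
graph = describe_contribution(
    contribution_id="contribution:1",
    problem="problem:P",
    methods=["method:M1", "method:M2"],
    results=["result:R"],
    source="paper:placeholder",
)
print(objects_of(graph, "contribution:1", "uses_method"))
```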

Infrastructure: KG can be queried and explored by anyone, but registration is required to contribute to the KG (a way to ensure proper authorization and domain expertise?)

KGs need to capture fuzziness: competing evidence, differing definitions and conceptualizations

Interesting question around scoring contributions to the scientific KG instead of using document-centric metrics like h-indices (potentially a more accurate assessment of contributions)

[4] has a really nice analogy: when phone books and maps were digitally transformed, we didn’t just create PDF books with this information; instead, we developed new means to organize and access information. Why not push for such a re-organization in scholarly communication too? Building on [3], their ontology also contains: problem statement, approach, evaluation and conclusions. They use RDF with a minor difference: everything can be modeled as an entity with a unique ID. A limited subset of OWL (subclass inference) is supported. They ran a small user study with this tool, but it doesn’t seem like Dafna’s questions about the utility of a KG constructed by someone else, or about consensus across people, were measured. They do, however, speak to Dafna's question about how painful it is to construct KGs.

so possible good open question: how might KGs constructed by one set of scientists actually be useful or not for other scientists, and what factors influence whether this is the case?

- experiment idea: even just starting by compiling any successful instances of adoption of KGs that span multiple groups would be a good thing to do; Peter Murray-Rust, maybe UMLS also

Note: Both papers actually reference a fair number of other works in this direction, which could be good follow-up reading

Papers I didn’t get to

(numbering here does *not* map to numbering in sections above)

[1] Empirical study of RDF reification

[2] Maintaining provenance in uncertain KGs, maintaining provenance in dynamic KGs

[3] System for provenance exploration

[4] iKNOW: KG construction for biodiversity

[5] Provenance information in a collaborative environment: evaluation of Wikipedia external references

[6] ProVe: Automated provenance verification of KGs against text

Understanding knowledge graph transfer

I was able to track down my main notes/sources from before and clean them up a little (not *that* much effort :)):

Last version (not most updated, bc export is slooowwwwww from Roam rn) here: https://publish.obsidian.md/joelchan-notes/discourse-graph/questions/QUE+-+How+can+we+best+bridge+private+vs.+public+knowledge

Will clean up based on this: https://roamresearch.com/#/app/megacoglab/page/XHvRRSi_0

TL;DR (can clean up here based on Discourse Modeling conventions :):

- distributed sensemaking (Kittur, Goyal, etc.; some promise that yes, it is helpful to share)

- sensemaking handoff (limited, but a major focus in intelligence analysis, there are tools and processes for doing this)

- reuse of records/data/stuff in general in CSCW across a bunch of work domains/settings, like aircraft safety, healthcare, etc. (generally talks about why it's hard to reuse stuff from others, need context)

- annotations / social reading (generally negative/mixed)

MG: can we search the literature based on what tool they studied things with?

NO. not right now.

lack of good tools to "refactor" the literature to match those queries

maybe like Elicit? https://elicit.org/search?q=What+is+the+impact+of+creatine+on+cognition%3F&token=01GHRSBKG6P1R9343NBP2ZWZ5B&c-ps=intervention&c-ps=outcome-measured&c-s=What+tool+did+they+study but for everything??

Compiling graphs to manuscripts

Overview

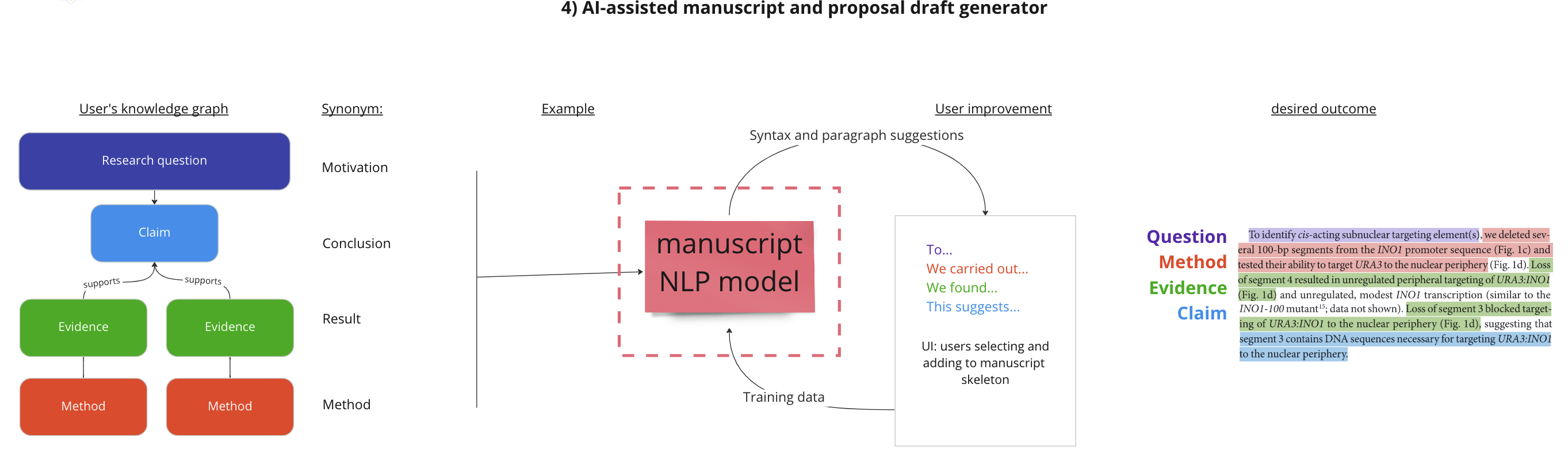

We'd like to build a prototype of a tool that starts with discourse units (phrases with the category Question/motivation, Method, Evidence, Claim) and uses NLP to generate a draft of a manuscript paragraph.
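
A rough sketch of the first step such a prototype might take: ordering discourse units by category and assembling them into a prompt for a text-generation model. The names `DiscourseUnit` and `build_prompt`, the prompt wording, and the example text are all assumptions; no model is called here, since the choice of model/API is still open.

```python
from dataclasses import dataclass
from typing import List

# Category order used to lay out the paragraph (Question covers question/motivation).
CATEGORIES = ("Question", "Method", "Evidence", "Claim")


@dataclass
class DiscourseUnit:
    category: str  # one of CATEGORIES
    text: str


def build_prompt(units: List[DiscourseUnit]) -> str:
    """Order units by category and format them as a prompt for a language model."""
    for unit in units:
        if unit.category not in CATEGORIES:
            raise ValueError(f"unknown category: {unit.category}")
    # sorted() is stable, so units within one category keep their input order
    ordered = sorted(units, key=lambda u: CATEGORIES.index(u.category))
    lines = [f"{u.category}: {u.text}" for u in ordered]
    header = (
        "Draft one manuscript paragraph from the discourse units below. "
        "Use only the listed content."
    )
    return header + "\n\n" + "\n".join(lines)


# Placeholder units, made up for illustration.
units = [
    DiscourseUnit("Claim", "Protein X is required for process Y."),
    DiscourseUnit("Question", "Is protein X required for process Y?"),
    DiscourseUnit("Evidence", "Process Y was reduced when protein X was depleted."),
    DiscourseUnit("Method", "Depletion of protein X followed by live-cell imaging."),
]
print(build_prompt(units))
```

The resulting string could be pasted into a playground or passed to whichever model the prototype ends up using, with a human editing the generated draft.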

Purpose

Such a tool would speed up a really time consuming aspect of the academic job: drafting grant proposals and manuscripts from content. It would also encourage researchers to generate structured content (questions/claims/evidence) which will get incorporated into a discourse graph.

The benefit to the user is a structured approach to writing papers and grant proposals. Using the tool will introduce researchers to the concept of discourse units, and generate a repository of discourse units that can be turned into discourse graphs.

Ideal outcomes

(more to add here)

This tool could also enable the micropublication of mini discourse graphs (one question/method/evidence/claim) by generating a draft of the explanatory text.

- Could also integrate into Roam Research graphs, e.g. in Matt's lab, via the Roam GPT-3 extension

What we're doing next

- Belinda could mock something up in OpenAI playground really quickly for a few papers

- need examples or access to repo

- Matt and Michael will share some examples of papers or content from the discourse graph to use as source data

- Sid can check out Roam AI extension for incorporation into our roam graph workflow (fork, make modifications etc)

Related conversation

- Dafna: "compiling" discourse graph to manuscript seems much easier, esp. if have consistent structure and human-in-the-loop

- Similar to brainstorming discussion/ethics statements for a paper given abstract (via GPT-3)

- Belinda: how much variation in paper structure within your field?

- some variations by journal

Claims in the conversation that need evidence

- the majority of empirical research papers in biology have a similar structure (question/ motivation/ evidence (fig.1a)/ claim for each paragraph & figure panel)

- multiple researchers (or students) asked to highlight the questions/claims/evidence text from a paper will highlight similar/consensus text (part of the NLP-to-highlights project)

notes/questions

- may need more than just the sentences, but also mapping to intra-project/paper elements, like figures and tables to

Next Steps

- don't know if a joint project makes sense, but perhaps coordinated first prototypes of a bridge?

could use:

- someone with programming skills to implement a POC translation between a discourse graph and one of the specific modeling languages/ontologies